The TIB AV-Portal in 2023: AI-based speech recognition, high-definition and dynamic frontend

The TIB AV-Portal is an open and free platform designed specifically for scientific videos. It offers numerous services for the professional use of AV media in science, including permanent citation, long-term archiving and targeted searches in video content. The portal provides an ad-free, secure and privacy-compliant environment tailored to the needs of the academic community. Since 2018, the portal has been developed further by a Scrum team at TIB consisting of four developers, a product owner and a Scrum master. In recent years, the team has become largely independent of third-party providers and can now implement most of its requirements itself. We have reported on our developments once a year since 2021. In this blog article, we present the features that were implemented in 2023.

Speech recognition with Whisper

Whisper is an AI model developed by OpenAI that converts spoken language into searchable text. It can transcribe 97 languages, translate many of them into English and cope well with accents and dialects. Its main strengths are high accuracy and efficiency in speech processing.

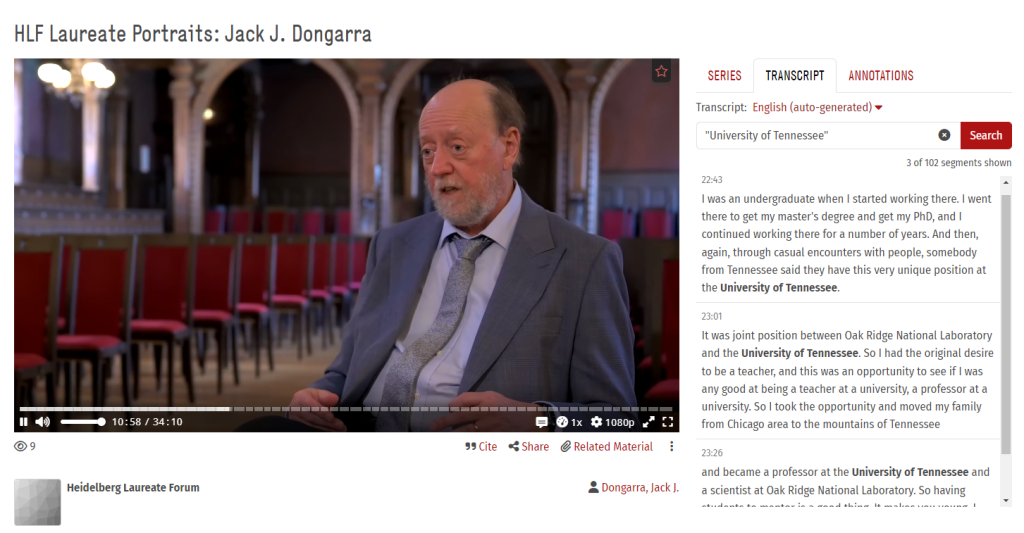

Since July 2023, all newly added videos have been transcribed by Whisper. The transcripts are used in the AV-Portal both as subtitles and as searchable text of the spoken language. Users can filter the transcripts by specific search terms and navigate directly to the corresponding video sections. In addition, the transcripts can be downloaded and used for other purposes. In the future, we will also integrate Whisper’s translation function to improve multilingual search and understanding. This will make it possible, for example, to search Spanish-language videos with English terms and display the corresponding English subtitles. There are also plans to re-transcribe the AV-Portal’s older content with Whisper in order to benefit from its higher recognition accuracy compared to the previous speech recognition models.
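To illustrate how timed transcript segments can double as subtitles, here is a minimal sketch that converts segments into a WebVTT document. It assumes segments are dictionaries with `start`, `end` (in seconds) and `text` keys, similar to what Whisper produces; the function names and sample data are hypothetical and do not describe the AV-Portal's actual pipeline.

```python
def format_timestamp(seconds: float) -> str:
    """Format a time in seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def segments_to_webvtt(segments: list[dict]) -> str:
    """Turn timed transcript segments into a WebVTT subtitle document."""
    lines = ["WEBVTT", ""]
    for seg in segments:
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        lines.append(f"{start} --> {end}")
        lines.append(seg["text"].strip())
        lines.append("")  # a blank line separates cues
    return "\n".join(lines)


# Hypothetical sample segments (assumed structure: start, end, text)
segments = [
    {"start": 0.0, "end": 2.5, "text": " Welcome to the lecture."},
    {"start": 2.5, "end": 6.04, "text": " Today we look at thermodynamics."},
]
vtt = segments_to_webvtt(segments)
print(vtt)
```

The same segment list can feed both the subtitle track and the search index, which is why a single transcription pass can serve both purposes.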

From standard to high definition

In the past, the media asset management system (MAM) of a third-party provider was used to create video derivatives, and the video quality was just below HD. We now create several derivatives ourselves in various resolutions, from 240p to 1080p (Full HD). These derivatives are currently still delivered via the MAM, but this will change in the foreseeable future (see Outlook). The HD quality that we now offer in the AV-Portal clearly exceeds the old quality. This is particularly noticeable in slides with small fonts, which are now much easier to read. By default, we play the derivative with the highest available resolution, and users can select a different quality level. In the future, the resolution will be adjusted automatically to the available bandwidth.

Reconfiguration for a more reactive and scalable frontend

In 2023, the AV-Portal’s Scrum team migrated the individual pages of the video portal, which were developed in the Wicket frontend framework, to the Vue.js framework over a period of several months. In Wicket, changes to the user interface are executed through complete page reloads or partial loads (Ajax). In contrast, with Vue the browser only loads a single HTML page initially; all other content and components are updated dynamically, i.e. without the page having to be completely reloaded. This allows Vue to provide a more dynamic and reactive user interface compared to the more traditional, server-side approach of Wicket. Vue also offers significant advantages for scaling frontend projects through its component-based architecture, which simplifies the organization and maintenance of large applications.

Optimization of the search function in the AV-Portal

In summer 2022, we created lists of synonyms and English translations for all subjects in the AV-Portal. These are based on data from the open data dump of the Integrated Authority File (GND). In addition to the search terms entered by the user, these synonyms and translations are also queried in order to increase the number of relevant search results. The generation of these lists was largely automated in 2023. This allows us to efficiently integrate the current data, which is published approximately every three months in the GND dump, into our system.
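The query expansion described above can be sketched as follows. This is a simplified illustration: the `SYNONYMS` dictionary is a hypothetical stand-in for the lists generated from the GND dump, and `expand_query` is an assumed helper name, not part of the AV-Portal code.

```python
def expand_query(term: str, synonyms: dict[str, list[str]]) -> list[str]:
    """Return the search term plus its known synonyms and translations.

    Lookup is case-insensitive; duplicates are dropped while order is kept.
    """
    expanded = [term]
    seen = {term.casefold()}
    for alternative in synonyms.get(term.casefold(), []):
        if alternative.casefold() not in seen:
            seen.add(alternative.casefold())
            expanded.append(alternative)
    return expanded


# Hypothetical excerpt of a synonym/translation list keyed by lowercase subject
SYNONYMS = {
    "thermodynamik": ["Wärmelehre", "thermodynamics"],
}

print(expand_query("Thermodynamik", SYNONYMS))
print(expand_query("Akustik", SYNONYMS))  # no entry: only the original term
```

All returned terms would then be sent to the search index together, so that a video tagged only with a synonym or the English translation still matches.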

Transcripts of the spoken language can be filtered by search terms on the video detail page so that users can navigate directly to the sections of the video that interest them most. This feature is particularly useful for two reasons: the main content of a video is usually conveyed through speech, and transcript accuracy has improved considerably since the integration of Whisper.
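A minimal sketch of such a transcript filter is shown below. It assumes the transcript is available as a list of segments with a start time in seconds and a text; the function name and the sample data are hypothetical.

```python
def find_in_transcript(segments: list[dict], term: str) -> list[float]:
    """Return the start times (in seconds) of all segments containing the term."""
    needle = term.casefold()
    return [seg["start"] for seg in segments if needle in seg["text"].casefold()]


# Hypothetical transcript segments
transcript = [
    {"start": 0.0, "text": "Welcome to the lecture."},
    {"start": 12.4, "text": "The first law of Thermodynamics states that energy is conserved."},
    {"start": 95.0, "text": "Let us return to thermodynamics and entropy."},
]

jump_points = find_in_transcript(transcript, "thermodynamics")
print(jump_points)
```

The player can then seek to any of the returned start times when the user clicks a hit.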

Videos with more views, i.e. a higher number of plays, are now ranked slightly higher. When two videos match a query about equally well in their metadata (such as titles or keywords), the one with more plays appears higher up in the search results. This boosting ensures that, all else being roughly equal, users come across more popular content more quickly.
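One common way to implement this kind of popularity boost is to multiply the text relevance score by a factor that grows slowly with the number of views. The sketch below uses a logarithmic factor; the formula, the `weight` parameter and the sample scores are assumptions for illustration and not the ranking function actually used in the AV-Portal.

```python
import math


def boosted_score(relevance: float, views: int, weight: float = 0.1) -> float:
    """Scale a relevance score by a mild, logarithmic popularity factor."""
    return relevance * (1.0 + weight * math.log10(1 + views))


# Hypothetical search hits: (title, relevance score, number of views)
hits = [
    ("Lecture A", 1.0, 50),
    ("Lecture B", 1.0, 5000),
    ("Lecture C", 2.0, 0),
]

ranked = sorted(hits, key=lambda h: boosted_score(h[1], h[2]), reverse=True)
print([title for title, _, _ in ranked])
```

Because the boost is logarithmic and weighted lightly, it mainly acts as a tie-breaker: a clearly more relevant video with few views (Lecture C) still outranks popular but less relevant ones.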

New highlighting function

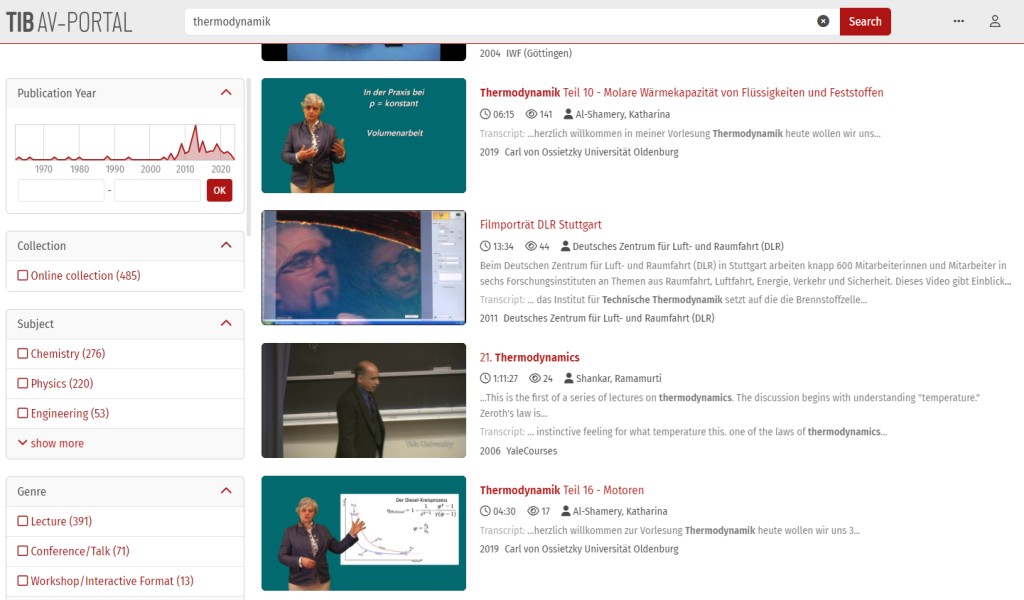

After the Vue migration was completed, highlighting was reactivated. All search terms as well as their synonyms and translations are now highlighted in bold in the search results. For example, if the user enters “Thermodynamik”, then “Wärmelehre” and “thermodynamics” are highlighted in bold in addition to “Thermodynamik”. Highlighting has also been extended to hits in the transcripts. This makes it easier for users to see quickly whether a video matches their search query or interests.
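The highlighting behaviour can be approximated with a small helper that wraps every occurrence of a search term, synonym or translation in bold tags. This is a sketch under assumptions: the function name is hypothetical, matching is a simple case-insensitive substring match, and the real frontend may rely on the search engine's own highlighting instead.

```python
import html
import re


def highlight(text: str, terms: list[str]) -> str:
    """Wrap each occurrence of any term in <b>...</b>, escaping the rest as HTML."""
    # Try longer terms first so they win over shorter terms they contain
    ordered = sorted((t for t in terms if t), key=len, reverse=True)
    if not ordered:
        return html.escape(text)
    pattern = re.compile("|".join(re.escape(t) for t in ordered), re.IGNORECASE)

    parts = []
    last = 0
    for match in pattern.finditer(text):
        parts.append(html.escape(text[last:match.start()]))
        parts.append(f"<b>{html.escape(match.group(0))}</b>")
        last = match.end()
    parts.append(html.escape(text[last:]))
    return "".join(parts)


terms = ["Thermodynamik", "Wärmelehre", "thermodynamics"]
print(highlight("Einführung in die Wärmelehre (thermodynamics)", terms))
```

Escaping the non-matching parts keeps the output safe to insert into a page even when titles or transcripts contain characters such as `<` or `&`.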

Modernisation of the media player

The video player has been completely rebuilt to make it compatible with the modern frontend framework Vue. All controls in the player have been implemented from scratch so that we now have complete control over all elements. In addition, the preview image on the end screen shown after playback now includes a video preview. Thanks to Vue, only the updated content is loaded when users navigate from one detail page to the next. This makes browsing through the video content faster and smoother.

Outlook on future developments

There are some exciting developments and planned innovations that we want to tackle in the coming months. We are already well advanced with some of these projects, while others are in the experimental phase.

On the way to independent hosting

We completed essential preparations for video hosting at TIB in 2023. In the near future, we will receive a hosting server from the IT department, which we can use to deliver our derivatives ourselves. This means that derivative creation and video delivery will be handled entirely by TIB.

MPEG-DASH for adaptive streaming

We are currently working on a solution for streaming multimedia content: MPEG-DASH, short for “Dynamic Adaptive Streaming over HTTP”. This technology enables flexible and efficient transfer of video content over the Internet. Our approach involves creating individual files for the different quality levels. The special feature is that the player switches automatically between these quality levels depending on the current bandwidth: at a higher bandwidth, it switches to a file with a higher resolution, and at a lower bandwidth it reduces the resolution accordingly. This allows largely uninterrupted playback without long loading times, even if the network bandwidth fluctuates.
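The core of this switching logic can be sketched in a few lines: given the measured bandwidth, pick the best quality level that still fits. The bitrates in the ladder below, the `safety` margin and all names are illustrative assumptions; in practice a DASH player makes this decision itself based on the stream manifest and its own heuristics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    height: int        # vertical resolution, e.g. 1080 for 1080p
    bitrate_kbps: int  # assumed average bitrate of this quality level


def pick_rendition(renditions: list[Rendition], bandwidth_kbps: float,
                   safety: float = 0.8) -> Rendition:
    """Choose the highest-bitrate rendition that fits into the usable bandwidth.

    Only a fraction (`safety`) of the measured bandwidth is used to leave
    headroom for fluctuations. Falls back to the lowest rendition if none fits.
    """
    budget = bandwidth_kbps * safety
    ordered = sorted(renditions, key=lambda r: r.bitrate_kbps)
    best = ordered[0]
    for rendition in ordered:
        if rendition.bitrate_kbps <= budget:
            best = rendition
    return best


# Hypothetical quality ladder from 240p to 1080p (bitrates are made up)
LADDER = [
    Rendition(240, 400),
    Rendition(480, 1200),
    Rendition(720, 2500),
    Rendition(1080, 5000),
]

for bandwidth in (10_000, 2_000, 100):
    print(bandwidth, "kbit/s ->", pick_rendition(LADDER, bandwidth).height, "p")
```

Re-running this selection whenever a new bandwidth measurement arrives yields the adaptive behaviour described above.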

Server-side rendering

At the moment, we are experimenting with server-side rendering. With this method, the content of a page is already rendered on the server before it is transmitted to the client, such as a web browser. This differs from the client-side rendering currently used in the AV-Portal, in which the content is rendered directly in the browser. Server-side rendering offers benefits such as faster initial loading times and better indexing by search engine crawlers.

Image recognition with OpenCLIP

Together with the Visual Analytics research group, we have experimented with an OpenCLIP model that contains an image encoder and a text encoder. OpenCLIP is an open-source implementation of CLIP, which, like Whisper, was originally developed by OpenAI. CLIP was trained on a large number of images and their corresponding descriptive texts. Its strength lies in its ability to recognize which texts belong to which images. This ability makes it possible to carry out zero-shot searches in our video collection: users can find videos simply by entering descriptive texts, without the system having been trained on these specific texts or videos in advance. As a first step, we plan to use CLIP to index specific image content such as bridges, generators or machines in order to enable faceted searches. Looking further into the future, we could even imagine offering zero-shot searches for image content as a special use case.
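The idea behind zero-shot search can be illustrated without the model itself: text and images are mapped into the same vector space, and frames are ranked by how similar their vectors are to the vector of the query text. In the sketch below, the three-dimensional vectors are made-up stand-ins for real CLIP embeddings, and the frame identifiers and function names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_frames(text_embedding: list[float],
                frame_embeddings: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Rank video frames by similarity between their embedding and the query text."""
    scored = [
        (frame_id, cosine_similarity(text_embedding, vector))
        for frame_id, vector in frame_embeddings.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors standing in for image-encoder output (assumption, not real data)
frames = {
    "video_a@00:12": [0.9, 0.1, 0.0],
    "video_b@03:40": [0.1, 0.8, 0.2],
}
# Toy vector standing in for the text-encoder output of a query such as "a bridge"
query = [1.0, 0.0, 0.1]

for frame_id, score in rank_frames(query, frames):
    print(f"{frame_id}: {score:.3f}")
```

Because no category labels are involved, any descriptive text can serve as a query, which is what makes the search zero-shot.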

... is product owner of the TIB AV-Portal